Caching is one of the easiest and most effective ways to improve application performance with minimal effort.

By temporarily storing frequently accessed data, caching enables faster retrieval without repeatedly querying the underlying data source.

However, caching is not without challenges.

If not implemented carefully, it can lead to stale data, increased complexity and issues like the cache stampede problem.

In this blog post, we’ll take a closer look at the cache stampede problem and explore solutions.

Cache Stampede

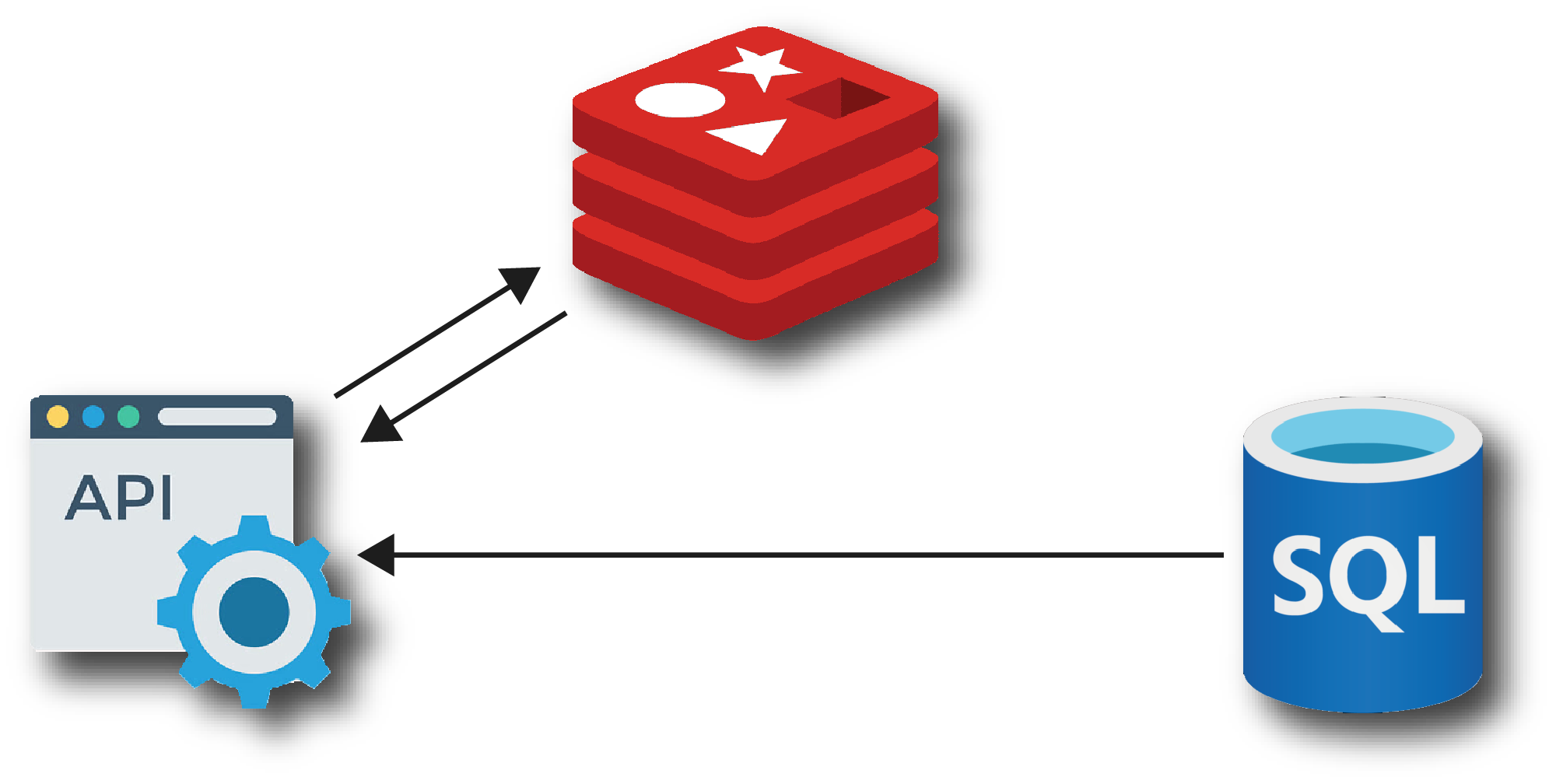

The concept of caching is simple, when certain data is accessed frequently, we add a caching layer to store it. This reduces latency, lowers costs and protects underlying resources from unnecessary load.

The typical flow for a cached request is straightforward:

- If the data is present in the cache, it is returned immediately.

- If the data is not in the cache, the app fetches it from the database or API, stores it in the cache for future requests and returns it to the client.

It all works well, until it doesnt..

Cache stampede occurs when multiple requests hit an expired cache entry at the same time. Instead of a single request refreshing the data, all requests simultaneously query the underlying data source.

This can overload your database or API, resulting in higher latency and timeouts.

To avoid it, caching mechanism should implement strategies like:

- Refresh Ahead: Refresh cache entries before they expire.

- Request coalescing: Ensure that only one request repopulates the cache while other requests wait for the result.

Without these strategies, caching can actually reduce system stability rather than improve it.

Getting Started

Let’s start with a simple example using basic in-memory caching:

builder.Services.AddMemoryCache();

// ...

app.MapGet("products/{id}", async (Guid id, IProductService productService) =>

{

var response = await productService.GetById(id);

return Results.Ok(response);

});public class ProductService(ApplicationDbContext dbContext, IMemoryCache memoryCache) : IProductService

{

public async Task<Product> GetById(Guid id)

{

var key = $"product:{id}";

var response = await memoryCache.GetOrCreateAsync<Product>(

key,

async _ => await dbContext.Products.FirstOrDefaultAsync(x => x.Id == id));

return response;

}

}In this example, we registered in-memory caching with DI, making it available throughout the application.

We also defined a simple endpoint that fetches a product by its Id and ProductService that first checks the cache before querying the database.

GetOrCreateAsync ensures that if the product is already cached, it is returned immediately. Otherwise, it fetches the product from the database and stores it in the cache.

If multiple requests arrive before the cache is populated, all of them will hit the database simultaneously, causing a cache stampede.

Manual Approach

The simplest way to mitigate cache stampedes is to use a manual locking mechanism.

The most straightforward solution to introduce lock:

- The first request acquires the lock, performs the database call, and populates the cache.

- Other requests wait until the lock is released, then use the cached value.

private static SemaphoreSlim Semaphore = new(1, 1);

public async Task<Product> GetById(Guid id)

{

var key = $"product:{id}";

var cached = memoryCache.Get<Product>(key);

if (cached is not null)

{

return cached;

}

var locked = await Semaphore.WaitAsync(54000);

if (!locked)

{

return default;

}

try

{

cached = memoryCache.Get<Product>(key);

if (cached is not null)

{

return cached;

}

var data = await GetProductFromDb(id);

memoryCache.Set(key, data);

return data;

}

finally

{

Semaphore.Release();

}

}In this approach, if the product is already cached, it is returned immediately.

Otherwise, the lock is acquired and the cache is double-checked in case another request populated it in the meantime. If it’s still missing, the database is queried, the cache is updated, the lock is released, and the data is returned.

While this method is safe, it is far from efficient.

Using a single semaphore for all keys means requests for different product IDs are blocked unnecessarily.

A better approach is locking per key.

Unlike a single lock, a per-key lock only blocks requests for the specific key being refreshed. Requests for other keys can continue without blocking, improving throughput and reducing contention in high-traffic systems.

Alternatively, you can use a refresh-ahead strategy, where cache entries are refreshed before they expire. This ensures users never encounter a cache miss, providing smoother performance without locking overhead.

Caching Libraries

You could also rely on libraries that handle cache stampedes for you, we have two popular options now:

Both provide built-in stampede protection along with many additional features.

I particularly favor Fusion Cache, as it includes every feature I’ve ever needed in a caching library.

Using libraries like Fusion Cache is not only more convenient, but also better optimized. They handle everything efficiently, from cold starts onward, ensuring smooth performance under load.

Conclusion

To mitigate cache stampedes, you have several strategies.

Choosing the right approach depends on your application’s scale and complexity.

Fortunately, libraries like Fusion Cache allow us to handle cache stampedes and add advanced features to optimize caching in our application.

If you want to check out examples I created, you can find the source code here:

Source CodeI hope you enjoyed it, subscribe and get a notification when a new blog is up!